How AI Improves Texture Mapping for Artifacts

AI is transforming how we digitally restore artifacts, making the process faster, more precise, and scalable. Traditional manual methods are slow, labor-intensive, and prone to errors, sometimes taking months or even years to restore a single artifact. AI tools address these challenges by automating damage detection, texture reconstruction, and 3D mapping with sub-millimeter accuracy.

Key insights from the article:

- Speed: AI can restore textures in hours compared to weeks or years with manual methods. For example, a 15th-century painting was digitally restored in just 3.5 hours.

- Precision: AI detects microscopic details like cracks and faded pigments that human eyes often miss. Techniques like GANs and diffusion models help keep reconstructed textures aligned with the artifact's structure.

- Scalability: AI handles large collections efficiently, helping museums digitize and restore thousands of artifacts that would otherwise remain inaccessible.

- Reversibility: Digital restoration is non-invasive and fully reversible, preserving the original artifact while providing a visually restored version.

AI tools like "MurFact AI" and methods used by institutions like the Rijksmuseum and Vatican demonstrate the potential to preserve historical artifacts with unmatched accuracy and efficiency. By bridging the gap between technology and preservation, AI ensures that these treasures remain accessible for future study and appreciation.

Problems with Manual Texture Mapping for Artifacts

High Time and Labor Requirements

Manual texture mapping is an incredibly time-consuming and labor-intensive process. It requires meticulous human effort to analyze every surface detail, making it impractical for large collections. This approach can take months for a single artifact, let alone thousands.

Take the example of Dr. Alessandro Launaro, a Senior Lecturer in Classics at the University of Cambridge. Back in 2015, he encountered what he described as a "significant challenge" while analyzing thousands of Roman pottery fragments excavated from western Italy. His team needed to catalog around 6,000 pottery profiles to study trade and settlement patterns. Professor Carola-Bibiane Schönlieb, who collaborated on the project, emphasized that this manual process was not only tedious but also prone to errors that algorithms could avoid. By the end of 2020, the team shifted to MATLAB-based deep learning to automate what had become an overwhelming task for manual methods.

This reliance on manual labor creates bottlenecks in restoration projects, leaving many artifacts inaccessible to the public. For instance, about 70% of paintings in institutional collections remain out of public view, largely because the high costs and time demands of manual conservation create massive backlogs. The situation worsens when dealing with complex deterioration issues like peeling paint, mold, cracks, or large missing sections. These challenges require extreme precision to preserve the original artistic style, adding another layer of difficulty. Moreover, the limitations of acquisition technologies often hinder manual methods from accurately replicating historical craftsmanship.

Accuracy Issues in Reconstructing Damaged Textures

Besides being slow and labor-intensive, manual texture mapping often falls short in accuracy. Restorers face challenges in achieving the precision needed to faithfully reconstruct textures on fragmented or eroded artifacts. Human limitations, such as inconsistencies in motor control and color perception, frequently result in uneven or imprecise restorations. Worse yet, the process is irreversible - once materials are applied to an artifact, correcting mistakes without causing further damage is nearly impossible.

A striking example of these accuracy challenges comes from the Tirsted Church in Denmark. During the fourth re-restoration of its Gothic wall paintings in 2000, conservators had to remove nearly 50% of the non-original elements added during previous manual restoration attempts. These additions were deemed inaccurate and deviated from the artifact's authenticity. Efforts to restore original details can inadvertently compromise the artifact's integrity.

Traditional methods for copying murals often rely on ancient texts and subjective interpretations of fading patterns. This approach is not only time-consuming but also risks secondary damage to fragile artifacts due to repeated site visits. The Venice Charter underscores the importance of ensuring replacements blend harmoniously with the original while remaining distinguishable. Unfortunately, manual methods frequently fail to strike this balance, risking the falsification of artistic or historical evidence.

How AI Improves Texture Mapping

AI has transformed the traditionally tedious and error-prone process of manual texture mapping by automating key steps like detection, reconstruction, and application. With its ability to analyze and process data in seconds, AI delivers textures that seamlessly integrate with original surfaces while maintaining historical accuracy. Let’s explore how AI enhances each phase of texture mapping - from identifying damage to generating and applying textures.

AI-Based Damage Detection and Texture Analysis

AI leverages Context Encoders to perform semantic inpainting, a technique that predicts and recreates missing sections of textures with impressive historical fidelity.

By analyzing damage from multiple perspectives, AI can classify regions based on the severity of degradation. This goes far beyond surface-level examination - AI assesses details like 3D positions, surface normals, and geodesic distances (which account for surface curvature and proximity) to ensure reconstructed textures align perfectly with the artifact's physical structure.
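
To make two of these geometric cues concrete, here is a minimal sketch that computes a triangle's unit surface normal and approximates geodesic distance as the shortest path along mesh edges (Dijkstra's algorithm). The tiny folded mesh, the function names, and the edge-path approximation are all assumptions chosen for illustration; production systems typically use more accurate geodesic solvers.

```python
import heapq
import math

def unit_normal(a, b, c):
    """Unit normal of triangle (a, b, c): normalized cross product of two edges."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    length = math.sqrt(sum(x * x for x in n))
    return [x / length for x in n]

def geodesic_distances(vertices, triangles, source):
    """Approximate geodesic distance from `source` to every vertex
    as the shortest path along mesh edges."""
    adjacency = {i: set() for i in range(len(vertices))}
    for i, j, k in triangles:
        adjacency[i].update((j, k))
        adjacency[j].update((i, k))
        adjacency[k].update((i, j))
    dist = [math.inf] * len(vertices)
    dist[source] = 0.0
    heap = [(0.0, source)]
    while heap:
        d, v = heapq.heappop(heap)
        if d > dist[v]:
            continue  # stale queue entry
        for w in adjacency[v]:
            nd = d + math.dist(vertices[v], vertices[w])
            if nd < dist[w]:
                dist[w] = nd
                heapq.heappush(heap, (nd, w))
    return dist

# Hypothetical mesh: a flat unit square joined to a vertical wall along one edge.
vertices = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0), (1, 0, 1), (1, 1, 1)]
triangles = [(0, 1, 2), (0, 2, 3), (1, 4, 5), (1, 5, 2)]

print(unit_normal(vertices[0], vertices[1], vertices[2]))  # floor normal points along +Z
print(geodesic_distances(vertices, triangles, 0)[4])       # distance measured along the surface
print(math.dist(vertices[0], vertices[4]))                 # straight-line distance through space
```

On this mesh the along-surface distance from vertex 0 to vertex 4 is longer than the straight line between them, which is the reason curvature-aware distances matter when deciding which neighboring regions should inform a reconstructed texture.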

The scale of these capabilities is staggering. After the 2019 Notre Dame Cathedral fire, Livio De Luca led a team using AI-powered photogrammetry and deep learning to analyze over 14,000 manual annotations, automatically identifying complex architectural forms and signs of degradation. Similarly, in November 2024, the Vatican collaborated with Microsoft and Iconem to create a digital twin of St. Peter's Basilica. Using over 400,000 high-resolution images, AI was able to visualize the structure in fine detail and identify potential structural risks.

"Deep learning approaches help us today to introduce new ways for correlating multi-scale and multi-temporal observations more effectively, saving considerable time for specialized works." - Livio De Luca, Research Director, French National Centre for Scientific Research

Once damage is identified with precision, generative models step in to reconstruct textures that are both realistic and historically accurate.

Generative Models for Realistic Texture Reconstruction

Generative Adversarial Networks (GANs) play a pivotal role in texture reconstruction. Using a generator-discriminator framework, GANs can predict and fill in missing or damaged sections by analyzing surrounding areas. For example, Context Encoders - encoder-decoder networks trained with an adversarial loss in the manner of a GAN - have been used to restore eroded or destroyed details in historic sites like Angkor Wat and Rome's Forum.

Modern diffusion models, such as Stable Diffusion, take things a step further. These systems can generate textures from simple text prompts while incorporating tools like ControlNet to ensure the textures align with the artifact's depth and edge contours. The Make-A-Texture framework demonstrates the speed and efficiency of these models, synthesizing high-resolution texture maps in just 3.07 seconds on a single NVIDIA H100 GPU.

For projects requiring intricate detail, specialized GANs like ScaleSpaceGAN excel. By using progressive Fourier features, these models capture details across vastly different scales. In July 2024, researchers at the Max-Planck-Institut für Informatik demonstrated how this technology could zoom in 256 times on a painting - revealing everything from the overall composition to individual cracks in the oil paint. Remarkably, this approach requires 885 times fewer parameters than the total pixel values of an equivalent gigapixel image.

"AI-powered tools analyze damaged artifacts, predicting and filling in missing sections based on similar pieces or historical records." - Bob Hutchins, Founder and President, Human Voice Media

AI Integration in 3D Texture Mapping

AI doesn’t stop at reconstruction - it also enhances the full 3D representation of artifacts. Transformer-based architectures can predict 3D texture fields from a single reference image and a mesh, producing high-quality textures in just 0.2 seconds per shape. This eliminates the need for the labor-intensive manual creation of UV maps.

AI typically processes textures in three stages: ensuring overall consistency, refining details, and filling in missing areas. To prevent issues like "texture bleeding" and to preserve small geometric details, models use ControlNet signals with depth and lineart conditioning.

Beyond simple RGB coloring, AI now incorporates vision-capable Large Language Models (LLMs) like GPT-4V to analyze artifact segments and recommend appropriate materials. For instance, a model might suggest fabric for cushions or ceramic for pottery, then retrieve matching parametric materials from a database. This ensures artifacts remain editable and relightable. For artifacts with severe damage, Multi-Scale Upsampling GANs (MU-GAN) generate dense point cloud patches to fill gaps caused by erosion or breakage.

Step-by-Step Guide: Using AI for Texture Mapping

Step 1: Capture High-Resolution Artifact Data

Start by collecting high-resolution data with equipment suited to the artifact you're working on. For general objects, a high-resolution digital SLR camera, such as the Nikon D90 with fixed focal length lenses, is ideal for photogrammetry. For smaller artifacts (less than 0.6 inches), macro photography is essential to capture fine details and avoid shadows. When working with large sites or landscapes, drones offer the best way to capture aerial perspectives.

To achieve sub-millimeter accuracy, combine laser scanning with photogrammetry. Tools like the FARO Edge CMM arm or Konica Minolta Vivid 9i provide precise geometric data, while photogrammetry adds high-resolution textures.

"Laser scanned data is among the most accurate geometrical data available in modern scanning techniques, but it lacks in its ability to accurately capture textures and diagnostic coloration information." - Christopher Dostal, Researcher, Texas A&M University

For more intricate needs, Reflectance Transformation Imaging (RTI) captures detailed surface geometry and texture under varying light conditions. After collecting your raw photos or videos, use software like Artec Studio (priced at $48–$141 per month) or Agisoft PhotoScan (since renamed Agisoft Metashape) to process the data and build 3D models.

Once you've gathered the high-resolution data, let AI help you identify damaged areas.

Step 2: Preprocess with AI Damage Detection

AI can pinpoint damaged or missing areas in your data with impressive precision. Modern models use attention-based region detection through Local Fusion Modules (LFM). These modules map descriptive words to specific regions in an image, allowing the AI to focus on areas that need restoration. For artifacts with significant surface loss, you can provide text-based metadata or sketches as a "semantic prior" to guide the AI.

The AI works by comparing degraded samples with clean reference samples in a perceptual quality space, helping it distinguish between actual damage and the artifact's intended texture. For physical items like ceramics, deep learning models use semantic inpainting to identify missing elements and generate a visual counterpart for 3D reconstruction. Additionally, specialized diffusion models like UVHD remove fixed lighting effects from photographs, ensuring textures can be accurately re-lit in digital environments.

Studies have shown that AI-guided reduction of visual artifacts (rendering defects, as opposed to heritage objects) is highly effective. In fact, more than 90% of users preferred AI-driven results for their visual quality and coherence. As noted earlier, transformer-based frameworks can also predict a 3D texture field from a single image and mesh in just 0.2 seconds per shape.

Accurate damage detection is a crucial step in preserving the artifact's original integrity.

Step 3: Generate Textures Using AI Models

To recreate textures, specialized GANs (Generative Adversarial Networks) like ScaleSpaceGAN excel at capturing both overall composition and intricate details. For artifacts with severe damage, Multi-Scale Upsampling GANs (MU-GAN) can generate dense point cloud patches to fill gaps caused by erosion or breakage.

Step 4: Apply AI to Map Textures in 3D

AI can transform 2D images into 3D point clouds using depth-map backprojection, which identifies geometric inconsistencies and ensures textures align accurately with the artifact's physical structure. Specialized models detect incomplete areas and remove illumination artifacts before final texture mapping. Models trained on datasets like Objaverse, which includes approximately 800,000 textured 3D assets, can handle a wide variety of object categories.
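
The core of depth-map backprojection fits in a few lines. The sketch below assumes an ideal pinhole camera with known intrinsics (focal lengths `fx`, `fy` and principal point `cx`, `cy`, all hypothetical values here) and a depth map stored as nested lists where 0 means "no reading"; real pipelines also handle lens distortion and camera pose.

```python
def backproject(depth, fx, fy, cx, cy):
    """Convert a depth map into camera-space (X, Y, Z) points.

    depth: rows of depth values along the optical axis; values <= 0 are skipped.
    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels.
    """
    points = []
    for v, row in enumerate(depth):          # v = pixel row
        for u, z in enumerate(row):          # u = pixel column
            if z <= 0:
                continue                     # hole in the depth map
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points

# Hypothetical 2x2 depth map with two missing readings.
depth_map = [[2.0, 0.0],
             [0.0, 4.0]]
print(backproject(depth_map, fx=2.0, fy=2.0, cx=0.0, cy=0.0))
# [(0.0, 0.0, 2.0), (2.0, 2.0, 4.0)]
```

Pixels without a valid depth produce no point, and those holes are one simple way incomplete regions become visible before texture mapping.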

After mapping, refine and validate the textures for both historical accuracy and visual fidelity.

Step 5: Refine and Validate Results

Validation is key to ensuring the final textures are accurate. Use metrics like CLIP-Score, LPIPS, and PSNR to confirm quality, and have experts review the results. Tools like Multi-ControlNet with Canny, Depth, Tile, and OpenPose layers help preserve edges and 3D structure. If edges appear distorted, adjust Canny ControlNet weights to 0.6. For historical portraits, use "BakedVAE" to prevent unnatural color shifts and apply a "Face Detailer" node to sharpen blurry features.
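
Of the three metrics, PSNR is the only one that needs no learned model, so it is easy to sketch directly. The example below assumes 8-bit grayscale images stored as nested lists and uses the standard definition, PSNR = 10 · log10(MAX² / MSE); the function name and sample values are illustrative. CLIP-Score and LPIPS depend on pretrained networks and are not shown.

```python
import math

def psnr(reference, restored, max_value=255):
    """Peak Signal-to-Noise Ratio in dB between two equally sized images."""
    pairs = [(r, s)
             for ref_row, res_row in zip(reference, restored)
             for r, s in zip(ref_row, res_row)]
    mse = sum((r - s) ** 2 for r, s in pairs) / len(pairs)
    if mse == 0:
        return math.inf                      # images are identical
    return 10 * math.log10(max_value ** 2 / mse)

# Hypothetical 2x2 patches: one pixel differs by 10 gray levels.
reference = [[100, 100], [100, 100]]
restored = [[100, 100], [100, 110]]
print(round(psnr(reference, restored), 2))   # about 34.15 dB
```

Higher values mean the restored texture is numerically closer to the reference. PSNR says nothing about perceptual or historical plausibility, which is why the expert review step still matters.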

A notable example of AI-driven restoration comes from June 2025, when Alex Kachkine at MIT digitally restored a 15th-century oil painting. Using scans from institutions like the Museo Nacional del Prado and the Met, Kachkine identified 5,612 losses across 66,205 mm² and applied a color-accurate bilayer laminate mask in just 3.5 hours, achieving remarkable precision.

By combining high-precision laser-scanned geometry with AI-generated textures, you can correct issues like "mottled" surfaces or mesh inaccuracies common in standalone photogrammetry. High-resolution scans with a 0.2 mm grid ensure detailed and accurate representation.

This validation process ensures that AI-enhanced textures honor the artifact's historical context while meeting restoration standards.

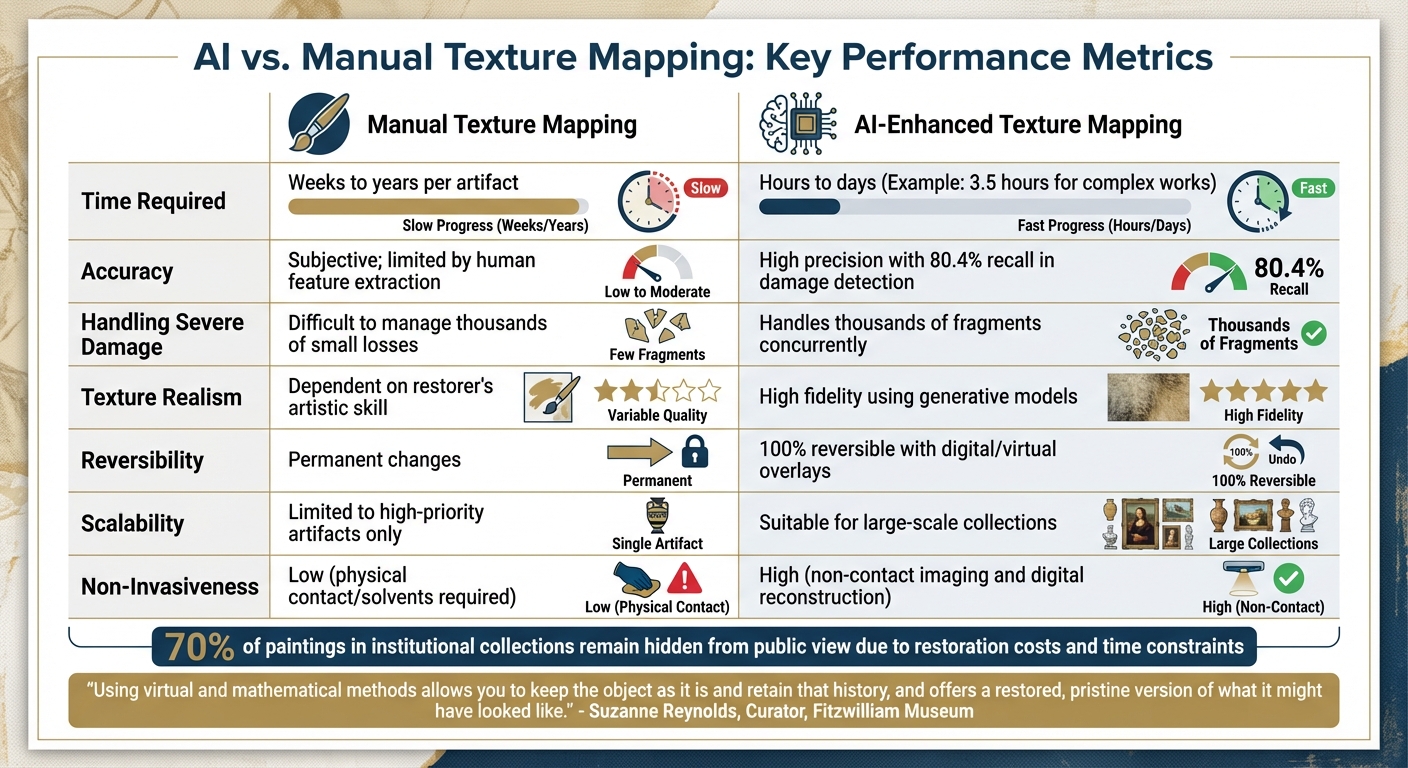

AI vs. Manual Texture Mapping Methods

AI vs Manual Texture Mapping for Artifact Restoration: Speed, Accuracy, and Scalability Comparison

Building on the advancements in AI processes we've discussed, it's time to compare how AI stacks up against traditional manual texture mapping methods.

Key Metrics for Comparison

When assessing AI alongside manual techniques, several metrics highlight the differences in performance. These include:

- Time Efficiency: How quickly each method can complete a restoration project.

- Accuracy: The ability to recreate textures that closely resemble the original artifact, often measured with tools like SSIM (Structural Similarity Index) and PSNR (Peak Signal-to-Noise Ratio).

- Reversibility: Whether changes can be undone without affecting the physical artifact.

- Scalability: The capacity to manage extensive collections or artifacts with significant damage.

- Texture Realism: How convincingly the restored surface mirrors the artist's original style.

These metrics are crucial for digital heritage preservation, ensuring that restored artifacts remain historically faithful while becoming more accessible to the public.

Manual restoration often faces challenges, particularly when dealing with intricate patterns or cultural motifs. Extracting and replicating these details can be incredibly difficult to quantify or reproduce. On the other hand, AI tools like Stable Diffusion paired with ControlNet excel in structural control over decorative elements. For instance, the ARTDET machine-learning software achieved an impressive 80.4% recall rate in detecting missing paint and prior repairs in digitized paintings - capturing details that might escape the human eye.
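
For readers unfamiliar with the term, recall is the share of truly damaged regions that a detector actually flags: true positives divided by true positives plus false negatives. The sketch below uses a tiny made-up pair of per-pixel masks (1 = damage); the values are purely illustrative and are not taken from the ARTDET evaluation.

```python
def recall_and_precision(truth, predicted):
    """Recall and precision for two equal-length binary damage masks."""
    tp = sum(1 for t, p in zip(truth, predicted) if t and p)       # damage found
    fn = sum(1 for t, p in zip(truth, predicted) if t and not p)   # damage missed
    fp = sum(1 for t, p in zip(truth, predicted) if not t and p)   # false alarm
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    return recall, precision

# Hypothetical masks: 4 damaged pixels, 3 detected, 1 false alarm.
truth = [1, 1, 1, 1, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 1, 0, 0, 0]
print(recall_and_precision(truth, predicted))  # (0.75, 0.75)
```

A recall figure alone does not say how many false alarms a detector raises, so it is usually worth reading alongside precision.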

This efficiency could help museums and institutions bring to light the estimated 70% of paintings currently kept out of public view, largely because of conservation backlogs. The table below compares the two approaches across these metrics.

Comparison Table

| Metric | Manual Texture Mapping | AI-Enhanced Texture Mapping |
|---|---|---|
| Time Required | Weeks to years per artifact | Hours to days (e.g., 3.5 hours for complex works) |
| Accuracy | Subjective; limited by human feature extraction | High; 80.4% recall in damage detection |
| Handling Severe Damage | Difficult to manage thousands of small losses | Handles thousands of fragments concurrently |
| Texture Realism | Dependent on the restorer's artistic skill | High fidelity using generative models |
| Reversibility | Permanent changes | 100% reversible (digital/virtual overlays) |
| Scalability | Limited to high-priority artifacts | Suitable for large-scale collections |
| Non-Invasiveness | Low (physical contact/solvents required) | High (non-contact imaging and digital reconstruction) |

AI's non-invasive techniques not only preserve the historical integrity of an artifact but also provide a digitally restored version for public appreciation. As Suzanne Reynolds, Curator at the Fitzwilliam Museum, aptly puts it:

"Using virtual and mathematical methods allows you to keep the object as it is and retain that history, and offers a restored, pristine version of what it might have looked like. It gives you the opportunity to have the best of both worlds."

AI Applications in Digital Artifact Preservation

AI is making waves in the world of artifact restoration, offering innovative ways to preserve and restore damaged historical pieces. From frescoes to sculptures, these case studies highlight how AI tools are transforming restoration efforts - both digitally and physically - while keeping the original artifacts intact.

Case Study: Restoring Damaged Historical Frescoes

Back in December 2015, researchers took on the challenge of piecing together the fragmented frescoes from St. Trophimena Church in Salerno, Italy, using a system called MOSAIC+. The church’s artwork had crumbled into countless fragments, making physical reassembly far too risky. The AI-powered MOSAIC+ system cataloged the fragments by their color, shape, and texture, allowing experts to virtually match the pieces without ever physically handling them.

The system also tackled craquelure (fine cracks on the surface) using mathematical morphology to digitally fill in these gaps. This approach resulted in a digitally restored version of the frescoes, which visitors could view in a museum setting.
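
One common morphological tool for thin dark cracks is a grayscale closing: a dilation (local maximum) followed by an erosion (local minimum). The sketch below is a plain-Python version with a 3×3 window on a made-up patch; it illustrates the general technique under those assumptions and is not a reproduction of the operators MOSAIC+ actually used.

```python
def _window_filter(img, pick):
    """Apply `pick` (max or min) over each pixel's 3x3 neighborhood,
    clipping the window at the image border."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(pick(window))
        out.append(row)
    return out

def closing(img):
    """Grayscale closing: dilation (max) then erosion (min)."""
    return _window_filter(_window_filter(img, max), min)

# Hypothetical 5x5 patch: bright paint (200) with a one-pixel-wide dark crack (20).
patch = [[200, 200, 20, 200, 200] for _ in range(5)]
for row in closing(patch):
    print(row)   # every row becomes [200, 200, 200, 200, 200]
```

Because the crack is narrower than the window, the dilation overwrites it with neighboring paint values and the erosion restores the original brightness elsewhere. Dark features wider than the window survive, so the window size controls which cracks get filled.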

"The fragments can be left untouched and manipulated virtually to find out the best combination before proceeding with the actual reconstruction".

Fast forward to more recent efforts, researchers at the Dunhuang Academy used a cutting-edge tool called PGRDiff to restore ancient murals at the Mogao Grottoes. This tool worked with text prompts like "restore the mural from the Tang Dynasty" to address ten different types of damage, such as salt crystallization and insect infestations. Unlike traditional methods that might obscure damaged areas entirely, PGRDiff treated the damage as a semi-transparent layer, preserving the underlying textures that could otherwise be lost.

Case Study: Virtual Reassembly of Fragmented Sculptures

Automated matching has also proven invaluable for reassembling fragmented artworks. In August 2008, a team led by Benedict J. Brown at Princeton University developed a system to piece together the shattered Theran wall paintings, ancient frescoes broken into thousands of fragments. Using range scans and automated 3D matching algorithms, the system aligned pieces based on their geometric features. Impressively, a single non-expert could scan at least 10 fragments per hour, significantly accelerating the process of digitization.

Another groundbreaking example occurred in June 2025, when Alex Kachkine at MIT restored a 15th-century oil-on-panel painting attributed to the Master of the Prado Adoration. By analyzing 57,314 colors, Kachkine’s AI system identified 5,612 areas of loss across 66,205 mm² (roughly 102.6 square inches). The AI then created a color-accurate digital mask, which was used to produce a bilayer laminate that could be physically applied to the painting. The entire process took just 3.5 hours, an astonishing 66 times faster than traditional manual inpainting techniques.
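
The figures in this case study can be sanity-checked with a few lines of arithmetic. The snippet below simply multiplies out the reported 66× speedup and converts the reported area to square inches; the eight-hour working day is an assumption added for scale.

```python
MM2_PER_IN2 = 25.4 ** 2            # 1 inch = 25.4 mm, so 645.16 mm² per in²

infill_hours = 3.5                 # reported AI-assisted infill time
speedup = 66                       # reported speedup over manual inpainting
manual_hours = infill_hours * speedup
working_days = manual_hours / 8    # assumption: eight-hour working days

area_in2 = 66_205 / MM2_PER_IN2    # reported area in mm², converted to in²

print(manual_hours)                # 231.0
print(round(working_days, 1))      # 28.9
print(round(area_in2, 1))          # 102.6
```

In other words, the implied manual effort is roughly 231 hours, or about 29 working days under the eight-hour assumption, and the area conversion quoted above checks out.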

"The infill process took 3.5 h, an estimated 66 times faster than conventional inpainting, and the result closely matched the simulation".

These examples show how AI-driven restoration methods aren't just about speed - they're enabling projects that would have been impossible before. By combining precision, efficiency, and respect for historical integrity, AI is reshaping the way we preserve and experience cultural heritage.

Conclusion

AI is revolutionizing texture mapping in artifact restoration by tackling the key challenges of traditional manual methods. What once took days or weeks can now be completed in just 3.5 hours - an astonishing 66 times faster than conventional approaches. This breakthrough makes restoration projects more accessible and cost-effective, especially for institutions where nearly 70% of paintings remain in storage due to prohibitive restoration expenses.

But speed isn't the only game-changer here. AI systems bring remarkable accuracy to the table. For instance, DC-CycleGAN improves the Frechet Inception Distance by an impressive 52.61% compared to earlier methods. These systems can analyze thousands of colors, detect subtle damage patterns invisible to the human eye, and reconstruct missing details with precision.

"This approach grants greatly increased foresight and flexibility to conservators, enabling the restoration of countless damaged paintings deemed unworthy of high conservation budgets." – Alex Kachkine, Department of Mechanical Engineering, MIT

Looking ahead, AI's role in heritage preservation extends far beyond restoration. Algorithms are paving the way for preventive conservation by predicting deterioration before it becomes noticeable. Tools like digital twins and virtual restoration environments allow researchers to experiment with conservation techniques without putting physical artifacts at risk. As Professor Francesco Colace highlights, AI enables non-invasive analysis of artifacts, "leading to substantial cost reductions in the medium and long term".

FAQs

How does AI help restore artifacts while maintaining historical accuracy?

AI is transforming artifact restoration by studying authentic reference materials like high-resolution images of period-specific art and objects. With tools such as Generative Adversarial Networks (GANs), these systems learn to replicate the distinct colors, textures, and patterns of a particular era. When portions of an artifact are missing or damaged, AI creates virtual reconstructions that blend seamlessly with the original design. Importantly, these digital adjustments are applied as reversible overlays, preserving the artifact's integrity.

To maintain precision, AI-generated suggestions are carefully reviewed by historians and conservators. They cross-reference these proposals with archival records and material analyses to ensure historical accuracy. Additionally, multi-spectral imaging plays a crucial role by detecting cracks, fading, or later modifications, helping AI differentiate between original details and damage. This thoughtful integration of technology and expert oversight ensures restorations are visually cohesive and historically accurate.

What are the key benefits of using AI for artifact restoration compared to traditional methods?

AI brings a host of benefits to artifact restoration that traditional manual methods simply can't match. One of the standout advantages is speed. With AI, digital repairs can be completed in hours, or even minutes for individual textures, where manual work would take weeks or longer. This rapid turnaround not only cuts costs but also makes it feasible to restore entire collections that might otherwise be sidelined due to limited budgets.

Another key benefit is precision. AI uses advanced algorithms, trained on vast datasets, to reconstruct textures with incredible accuracy. This means AI can recreate realistic textures without relying on time-intensive manual techniques like UV mapping. And because the process is entirely non-invasive, restored images are applied digitally - through overlays or displays - ensuring the physical artifact remains untouched and intact.

AI also improves accessibility by making high-quality restoration possible for artifacts that might not typically receive attention, such as lesser-known or lower-value pieces. This ensures more cultural treasures are preserved and shared with broader audiences, giving them the appreciation they deserve.

Can AI be used for restoring both single artifacts and large museum collections?

Yes. AI-driven texture mapping and restoration tools are flexible enough to handle everything from a single artifact to an entire museum collection. They also scale well, allowing precise work on individual items while maintaining consistency across thousands of pieces.

This shift is reshaping the way museums and preservation experts tackle restoration, streamlining the process to be faster, more precise, and practical for collections of any size.